Sneak peek: Adobe’s Project Artistic Scenes

Adobe just previewed two interesting experimental AI-powered technologies for editing 3D scenes during the Sneaks session at Adobe MAX 2022, its annual user conference.

Project Artistic Scenes stylises 3D scenes, changing their lighting and textures to match the look of a 2D reference image, while Project Beyond the Seen turns single photos into 360-degree environment maps.

Check out experimental new technologies that may soon appear in Adobe software

One of the annual highlights of Adobe MAX, Sneaks gives Adobe a chance to showcase its blue-sky research.

This year’s session showcased 10 new AI-based graphics technologies, for tasks ranging from type design to video editing, but below, we’ve picked out two particularly relevant to CG Channel readers.

While both are research projects and are not guaranteed to appear in Adobe's software, Sneaks often make their way into commercial products quite quickly.

Physics Whiz, a physics-based scene layout system and one of the highlights of the 2020 Sneaks session, became part of new layout and rendering tool Substance 3D Stager, released less than a year later.

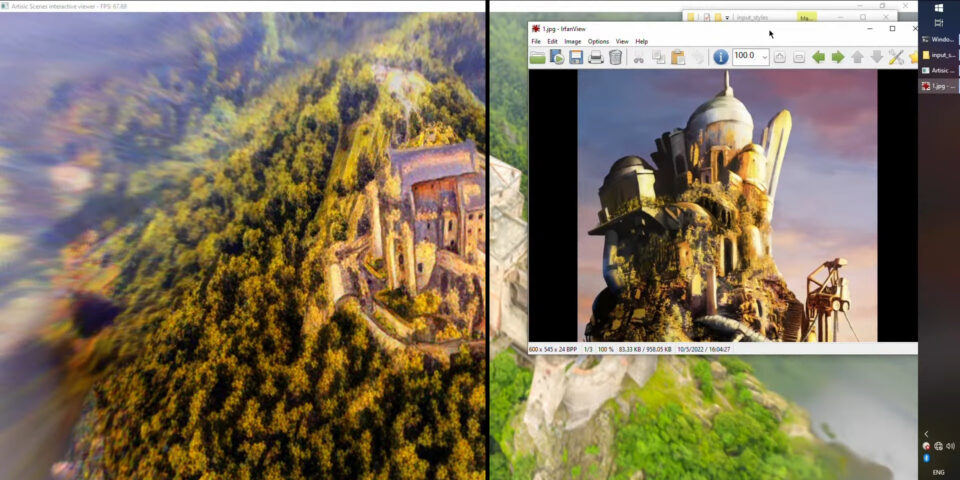

Project Artistic Scenes transfers the look of a source image (right, inset) to a 3D scene (right, main image), changing its lighting and textures, as shown in the image on the left.

Project Artistic Scenes: transfer the visual style of an image to a 3D scene

The idea of style transfer – transferring the visual style of one image to another – isn’t a new one.

Lots of 2D image-editing applications, including Adobe’s own Photoshop, include style transfer filters, and it’s even possible to transfer the look of a still image to video footage.

Project Artistic Scenes applies the same concept to 3D, changing the textures and lighting of a 3D scene to match that of a 2D reference image.

It first reconstructs a 3D scene from a series of source photos – it isn’t clear from the Sneaks session whether this is a necessary step of the process, or whether it would also work with an imported 3D model.

Users can then drag in a reference image to apply its visual style to the 3D scene.

Style is defined fairly broadly: given a pencil sketch as the reference image, Project Artistic Scenes gives the reconstructed scene a monochrome look, but not one that really preserves the individual character of the source image.

However, it does a good job of transferring the colour palette and overall mood of the source image, as in the example above, where a sci-fi concept image is applied to a 3D reconstruction of a real castle.

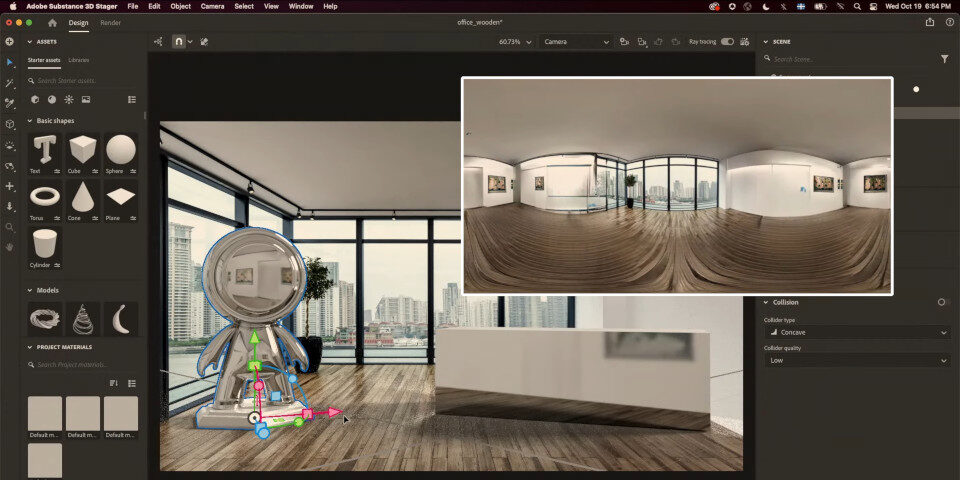

Project Beyond the Seen creates a 360-degree panorama (inset) from a single source photo – the backplate in this Substance 3D Stager scene – that can be used to generate reflections on 3D objects like the statue.

Project Beyond the Seen: turn a single photo into a 360-degree environment map

In contrast, Project Beyond the Seen is geared towards photorealistic imagery.

The project turns a single source photo into a 360-degree environment, using AI to fill in the missing parts of the scene behind the viewpoint of the photographer.

Although Adobe suggests that it could be used to generate 3D environments for virtual or augmented reality projects, there are noticeable distortions and artefacts that would be distracting in mixed reality.

A more likely early use case would be to generate environment maps for 3D scenes: the demo shows one of the environments that Project Beyond the Seen generates being applied as a light in Substance 3D Stager.

Although the image being applied is a JPEG rather than an HDR image, it generates plausible reflections on the 3D objects in the scene.

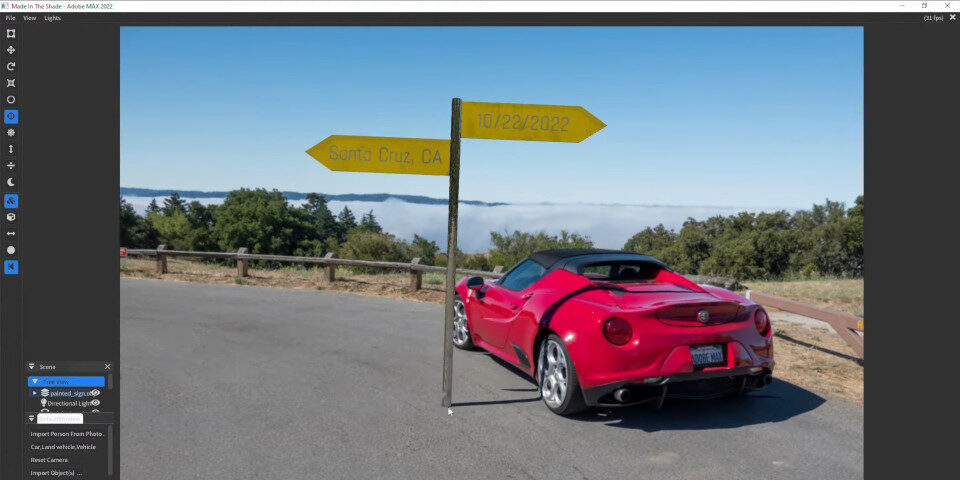

Project Made in the Shade automatically generates new shadows during image editing: either for 3D models dropped into the photo, as shown above, or when repositioning elements of the original 2D image.

Other highlights: neat new AI-powered technologies for editing photos and creating composites

The Sneaks session also previewed a couple of interesting image-editing technologies that seem good fits for Adobe applications like Photoshop or Lightroom.

Project Clever Composites speeds up the process of compositing elements from one image into another, automatically isolating the target object from its background, scaling it to match its new background, and generating appropriate shadows.

Project Made in the Shade does a similar job with 3D objects, automatically rescaling a 3D model to match a background image, then generating realistic shadows across existing objects in the photo.

More impressively, it enables users to isolate foreground objects from the photograph and reposition them within the image, automatically generating new shadows for them in real time.

You can find more information about both projects – and all of the other new technologies shown off during the 2022 Sneaks session – via the Adobe blog post linked below.

Read more about Adobe MAX 2022’s Sneaks session on Adobe’s blog